Edit 1: 2024.10.30

Edit 2: 2024.11.13

Basic RAG

General workflow

- During development

- Collect knowledge base (raw, unprocessed files)

- Load the knowledge base into documents by processing the raw files

- Cut up documents into different chunks (snippets)

- Embed each chunk, i.e. turn it into a vector

- Assign metadata to chunks to enable filtering afterwards

- Store the vectors into a vector database

- During usage

- User inputs a query

- Query is embedded using the same embedding model as in development

- Documents “similar” to the query are retrieved from the vector database by comparing their similarity in vector space

- Retrieved documents are combined with the user’s original query as context, and this combination is fed into the LLM. The LLM itself does not change at all.

- The LLM gives an answer based on that enhanced query

Why bother

- Private domain knowledge is not part of what LLMs see during training

- LLMs can hallucinate and output content that is absolutely horrendous

Detailed steps

Chunks

- Why bother segmenting documents into chunks

- Embedding length is fixed for EVERY input: the embedding of a whole Word file has the same length as the embedding of a single sentence in it, so a large input loses detail

- Exactly how embedding works is covered in the Embedding, Vector database part

- Chunk size is a hyperparameter
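
A minimal sketch of fixed-size chunking, to make the chunk size hyperparameter concrete. It assumes character-based chunks; the `overlap` parameter and the function name `chunk_text` are illustrative additions, not something the notes prescribe.

```python
def chunk_text(text: str, chunk_size: int = 200, overlap: int = 50) -> list[str]:
    """Split text into chunks of at most `chunk_size` characters.

    Consecutive chunks share `overlap` characters, so text cut at a
    boundary keeps some of its surrounding context in the next chunk.
    """
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be in [0, chunk_size)")

    step = chunk_size - overlap  # how far the window moves each time
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break  # the last chunk already reaches the end of the text
    return chunks
```

Real pipelines usually split on sentence or token boundaries instead of raw characters; the trade-off stays the same: smaller chunks match more precisely, larger chunks carry more context.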

- Retrieval of chunks

- Indexing of chunks

- Index is needed to enable optimised storage and retrieval of vectors

- Types

- Vector store index: the most straightforward index, used in FAISS

- Hierarchical index

- Use 2 indices, one for the actual document chunks, one for the summary of chunks

- When searching, first find the most relevant summary, then search for document chunks only within the documents covered by that summary

- Hypothetical Questions

- Ask LLM to generate a question based on each chunk

- Embed those questions

- When a query comes, retrieve similar questions and their original text chunk.

- This can be beneficial because the generated question and the actual question have high semantic similarity

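- Reverse this logic and you get another approach called HyDE: ask the LLM to generate a hypothetical answer to the query, embed that answer, and use it to retrieve chunks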

- Context enrichment

- Besides the matched chunk, also retrieve the content related to it

- Types

- Sentence window retrieval

- Retrieve a chunk and the surrounding sentences before and after that chunk

- Auto-merging retriever

- Retrieve a chunk, and also retrieve its sibling chunks under the same parent chunk if they are not retrieved already
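
Sentence window retrieval can be sketched as below. This assumes every chunk is a single sentence and that the retrieval step has already produced the index of the matching sentence; `sentence_window`, `hit_index` and `window` are hypothetical names.

```python
def sentence_window(sentences: list[str], hit_index: int, window: int = 1) -> str:
    """Return the matched sentence plus `window` sentences on each side."""
    if not 0 <= hit_index < len(sentences):
        raise IndexError("hit_index out of range")
    start = max(0, hit_index - window)                 # clamp at document start
    end = min(len(sentences), hit_index + window + 1)  # clamp at document end
    return " ".join(sentences[start:end])
```

The small sentence is what gets embedded and matched, while the wider window is what gets passed to the LLM as context.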

- Fusion retrieval

- Retrieve twice, once with vector search and once with keyword-based search

- Combine the two result lists, re-rank them with the Reciprocal Rank Fusion algorithm, and output the merged list
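
A minimal sketch of Reciprocal Rank Fusion: each document scores `1 / (k + rank)` in every list it appears in, and the scores are summed. The constant `k = 60` is a commonly used default, and the document IDs in the usage note are made up for illustration.

```python
from collections import defaultdict


def reciprocal_rank_fusion(rankings: list[list[str]], k: int = 60) -> list[str]:
    """Merge several ranked lists of document IDs into one ranking."""
    scores: dict[str, float] = defaultdict(float)
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] += 1.0 / (k + rank)
    # Highest fused score first; ties are broken by ID to keep output stable.
    return sorted(scores, key=lambda doc_id: (-scores[doc_id], doc_id))
```

For example, `reciprocal_rank_fusion([["a", "b", "c"], ["b", "d", "a"]])` puts `b` first, because it ranks high in both lists, followed by `a`, `d`, and `c`.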

- Ranking, filtering, or other transformations on retrieved chunks, using the postprocessors provided in LlamaIndex or LangChain

Embedding, Vector database

- Use embedding engines (i.e. Transformer-based models) to transform sentences or other elements in a document into vectors

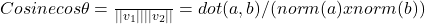

- The similarity of vectors is assessed by calculating their distances

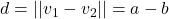

- Euclidean

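- An embedding is, roughly, how a topic, a sentence, a phrase etc. is understood, with that understanding of natural language expressed as a vector. A smaller distance indicates a closer relationship in the context of natural language.

- The distance between “international disputes” and “The US-Soviet Cold War, a struggle for global hegemony, had a profound impact on human society” is smaller than the distance between “international disputes” and “Our country carried out its first extravehicular radiation biology exposure experiment on the space station”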
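
The distance comparison can be sketched in a few lines. The 2-D vectors in the usage note are made-up toy values, not real embeddings, and cosine similarity is included only as a common alternative to Euclidean distance; the notes themselves name Euclidean only.

```python
import math


def euclidean_distance(a: list[float], b: list[float]) -> float:
    """Straight-line distance between two vectors; smaller means more similar."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors; larger means more similar."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)
```

With toy vectors `query = [1.0, 0.0]`, `related = [0.9, 0.1]` and `unrelated = [0.0, 1.0]`, `euclidean_distance(query, related)` is smaller than `euclidean_distance(query, unrelated)`, which mirrors the example above.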

- Those vectors are stored in a vector database to enable faster storage and retrieval

- Functions of a vector database lib:

- Initialization of a vector database (number of dimensions, which distance to use)

- Adding new EMBEDDED documents

- When an embedded query comes in, retrieve top-k similar documents

- In some implementations an ID for each doc is needed

- Vectors can be stored on disk or in RAM depending on the implementation
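
The functions listed above can be sketched as a tiny in-memory store. This is a brute-force illustration with hypothetical names (`TinyVectorStore`, `add`, `search`), not the API of FAISS or any other real library.

```python
import math


class TinyVectorStore:
    """In-RAM vector store: init with a dimension and metric, add, search top-k."""

    def __init__(self, dim: int, metric: str = "euclidean"):
        if metric not in ("euclidean", "inner_product"):
            raise ValueError(f"unsupported metric: {metric}")
        self.dim = dim
        self.metric = metric
        self._vectors: dict[str, list[float]] = {}  # doc ID -> embedding

    def add(self, doc_id: str, vector: list[float]) -> None:
        """Store an already-embedded document under an explicit ID."""
        if len(vector) != self.dim:
            raise ValueError("vector has the wrong number of dimensions")
        self._vectors[doc_id] = list(vector)

    def _score(self, a: list[float], b: list[float]) -> float:
        # Higher is always "more similar", so distance is negated.
        if self.metric == "euclidean":
            return -math.dist(a, b)
        return sum(x * y for x, y in zip(a, b))

    def search(self, query: list[float], k: int = 3) -> list[str]:
        """Return the IDs of the k stored vectors most similar to the query."""
        if len(query) != self.dim:
            raise ValueError("query has the wrong number of dimensions")
        ranked = sorted(
            self._vectors,
            key=lambda doc_id: self._score(query, self._vectors[doc_id]),
            reverse=True,
        )
        return ranked[:k]
```

A real vector database replaces the full scan in `search` with an index, which is where most of the speed comes from.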

Prompt engineering

- The user’s query is vectorized using the same embedding engine, with the same parameters, that was used for the documents

- An example template for the query sent to the LLM:

- “Given {context}, please answer {query}”

- {context} is the retrieved information

- {query} is the user’s original query
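
A minimal sketch of filling such a template. The exact wording of `PROMPT_TEMPLATE` and the placeholder names `context` and `query` are assumptions made for illustration.

```python
PROMPT_TEMPLATE = "Given the following context:\n{context}\n\nPlease answer: {query}"


def build_prompt(retrieved_chunks: list[str], query: str) -> str:
    """Combine retrieved chunks and the user's original query into one prompt."""
    # One bullet per retrieved chunk keeps the sources visually separate.
    context = "\n".join(f"- {chunk}" for chunk in retrieved_chunks)
    return PROMPT_TEMPLATE.format(context=context, query=query)
```

The resulting string is what gets sent to the LLM; the model itself is unchanged, only its input is enriched.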

Agents

Overview

- Elements of a high-level Agent that uses an LLM as its central mind:

- Planning

- Reflection

- Self-criticism

- Chain of Thought

- Subgoal decomposition

- Memory

- Short-term memory: patterns, reasoning methods etc. that are learned via prompt engineering

- Long-term memory: vector database, which is also where RAG retrieves information from

- Tool (external API) use

- Calculator, Code Interpreter, Search, …

- Information retrieval tools on Vector DB

- Vector DB can store internal information that cannot be accessed publicly via Search tool

- Other sub-agents

- …

- Action

Planning

- Task decomposition: break a single, complicated task into multiple smaller, manageable steps to utilize the LLM’s strengths. This can be done by (1) explicitly instructing the LLM to think step by step, (2) using task-specific instructions, like writing an outline first when writing a novel, or (3) using human input.

- Chain of thought: step by step

- Tree of thoughts: extends CoT by exploring multiple reasoning possibilities at each step. At each step, the possibilities are evaluated by a classifier or by majority vote

- LLM+P

- Self-reflection: make LLMs feel insecure

- ReAct:

- Reasoning + Action

- Think about what to do, do that thing, observe the output and think about what to do next, repeat

- Reflexion:

- Make the LLM reflect on its own output and take action if something fishy, like hallucination, happens

- Chain of Hindsight:

- Make the LLM think of its past traumas, i.e. hindsight on earlier outputs, and incorporate it into its current output process
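
The ReAct loop (think, act, observe, repeat) can be sketched as follows. The `ACTION` / `FINAL` reply protocol is a hypothetical convention invented for this sketch, and `scripted_llm` is a hard-coded stand-in for a real model so that the example runs on its own.

```python
from typing import Callable


def react_loop(
    llm: Callable[[str], str],
    tools: dict[str, Callable[[str], str]],
    question: str,
    max_steps: int = 5,
) -> str:
    """Alternate between LLM replies and tool calls until a final answer.

    Assumed reply format (hypothetical):
      "ACTION <tool_name> <tool_input>"  -> run the tool, feed back the result
      "FINAL <answer>"                   -> stop and return the answer
    """
    transcript = f"Question: {question}"
    for _ in range(max_steps):
        reply = llm(transcript).strip()
        if reply.startswith("FINAL "):
            return reply[len("FINAL "):]
        parts = reply.split(" ", 2)
        if len(parts) == 3 and parts[0] == "ACTION" and parts[1] in tools:
            observation = tools[parts[1]](parts[2])
        else:
            observation = "invalid action"
        # The observation is appended so the next reply can build on it.
        transcript += f"\n{reply}\nObservation: {observation}"
    return "no answer within max_steps"


def scripted_llm(transcript: str) -> str:
    """Stand-in for a real model: act once, then answer from the observation."""
    if "Observation:" not in transcript:
        return "ACTION add 2 3"
    return "FINAL " + transcript.rsplit("Observation: ", 1)[1]


def add_tool(tool_input: str) -> str:
    a, b = tool_input.split()
    return str(int(a) + int(b))


answer = react_loop(scripted_llm, {"add": add_tool}, "What is 2 + 3?")
```

The `max_steps` cap is a design choice to stop a model that never produces a final answer from looping forever.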

Memory

See the Embedding, Vector database part

- Formally, retrieving the stored vectors with the largest inner product with the query is called Maximum Inner Product Search (MIPS). A way to speed up MIPS is to use Approximate Nearest Neighbors (ANN) to rule out a large number of far-away vectors. This brings accuracy down a bit, but the speedup is huge

- FAISS is an ANN library, alongside other methods like LSH, ANNOY, HNSW, ScaNN
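
To illustrate how ANN rules out far vectors, here is a minimal sketch of one of the listed families, LSH, using random hyperplanes. Vectors on the same side of every hyperplane share a bucket, and only the query's bucket is scored exactly by inner product. The class name, `n_planes` and `seed` are illustrative, and this is not how FAISS is implemented internally.

```python
import random


def dot(a: list[float], b: list[float]) -> float:
    return sum(x * y for x, y in zip(a, b))


class HyperplaneLSH:
    """Bucket vectors by which side of each random hyperplane they fall on."""

    def __init__(self, dim: int, n_planes: int = 4, seed: int = 0):
        rng = random.Random(seed)  # fixed seed keeps the sketch reproducible
        self.planes = [
            [rng.gauss(0.0, 1.0) for _ in range(dim)] for _ in range(n_planes)
        ]
        self.buckets: dict[tuple, list[tuple[str, list[float]]]] = {}

    def _key(self, vector: list[float]) -> tuple:
        # One boolean per hyperplane: is the vector on its positive side?
        return tuple(dot(plane, vector) >= 0 for plane in self.planes)

    def add(self, doc_id: str, vector: list[float]) -> None:
        self.buckets.setdefault(self._key(vector), []).append((doc_id, list(vector)))

    def search(self, query: list[float], k: int = 3) -> list[str]:
        # Only the query's own bucket is scanned; every other bucket is skipped.
        candidates = self.buckets.get(self._key(query), [])
        ranked = sorted(candidates, key=lambda item: dot(query, item[1]), reverse=True)
        return [doc_id for doc_id, _ in ranked[:k]]
```

The accuracy loss mentioned above shows up here directly: a true nearest neighbour that lands in a different bucket is never considered.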

Tool use

Tools are essentially Python functions, but instead of explicitly defining when to use them, the LLM uses its semantic capabilities to figure out when to call them

Say that you have two tools: one validates the user’s input via a pydantic class and parses it into 4 fields, the other takes that pydantic object and uses it to precisely filter something out of a vector database
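
A rough sketch of those two tools. To stay dependency-free, a standard-library dataclass stands in for the pydantic class and a list of dicts stands in for the vector database. The four field names (`topic`, `author`, `year`, `limit`) are hypothetical, since the notes do not say what the fields are, and the LLM's tool selection itself is not implemented here.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Tool:
    name: str
    description: str  # the text an LLM would read to decide when to call it
    func: Callable


@dataclass
class ParsedQuery:
    # Hypothetical fields; a dataclass stands in for the pydantic class.
    topic: str
    author: str
    year: int
    limit: int


def parse_query(raw: str) -> ParsedQuery:
    """Tool 1: validate 'topic, author, year, limit' and parse it into 4 fields."""
    parts = [part.strip() for part in raw.split(",")]
    if len(parts) != 4:
        raise ValueError("expected 4 comma-separated fields")
    topic, author, year, limit = parts
    return ParsedQuery(topic, author, int(year), int(limit))


def filter_documents(query: ParsedQuery, documents: list[dict]) -> list[dict]:
    """Tool 2: exact metadata filter; `documents` stands in for the vector DB."""
    hits = [
        doc for doc in documents
        if doc["topic"] == query.topic
        and doc["author"] == query.author
        and doc["year"] == query.year
    ]
    return hits[:query.limit]


TOOLS = [
    Tool("parse_query", "Validate raw user input and parse it into fields.", parse_query),
    Tool("filter_documents", "Filter stored documents by the parsed fields.", filter_documents),
]


def describe_tools(tools: list[Tool]) -> str:
    """Render the tool list as text that could be placed in an LLM prompt."""
    return "\n".join(f"{tool.name}: {tool.description}" for tool in tools)
```

The descriptions are what make the tools usable by an LLM: the model only sees the text from `describe_tools` and decides from it which function to call and in what order.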
